Loadtesting EdgeRouter 4 in more depth

Problem statement

Previously I load-tested a bunch of routers. A reader wrote me a nice email, complimenting me on the post and asking whether I had considered tuning the EdgeRouter 4 setup:

One question I had was around the ER4 results, you commented that there was a major falloff when a threshold was hit. Your commentary actually is spot on for how the Canvium offload works - there is a tracking table for flows that can be overloaded at the default levels. Did you try increasing the size of “System → Offload → Flow Table Size” or reducing size of the Flow Lifetime?

I would imagine that is why you were able to blow out performance on the imix results as the small streams eventually starved out the Flow Table as they were shorter than the reap interval of Flow Lifetime.

More info is here: Hardware-Offloading – Section: Optional Offloading Optimizations and Testing

Answering that question with a flat “no” would not be very useful, I think.

Therefore in this post I’ll try and answer the question: What happens with the loadtest when I tweak the flow lifetime and flow table size?

Test setup

I resurrected the same setup from the Loadtesting bunch of routers article [1]. In the interest of saving time I’ll only be using the imix.py profile for all the tests.

After getting a baseline, I will use the following config command to tweak flow lifetime:

    configure
    # Set flow lifetime to 12s (default).
    # For the test I will try 6, 1, 0.
    set system offload flow-lifetime 12
    commit; exit

And then I’ll use the following config command to tweak the flow table size:

    configure
    # Set flow table size to 8192 for mem consumption of 1-4MB (default).
    # For the test I will try 65536, 524288, 4194304, 16777216.
    set system offload ipv4 table-size 8192
    commit; exit
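The two knobs above form a small test matrix. Here is a minimal sketch of generating the config snippet for each run, assuming one knob is varied at a time while the other is left alone. The helper names (`offload_commands`, `build_matrix`) and the labels are hypothetical; only the two `set system offload ...` commands and the values come from the snippets above.

```python
# Values taken from the comments in the config snippets above.
# These are the planned values; the router has the final say on valid ranges.
FLOW_LIFETIMES = [12, 6, 1, 0]  # seconds; 12 is the documented default
TABLE_SIZES = [8192, 65536, 524288, 4194304, 16777216]


def offload_commands(flow_lifetime=None, table_size=None):
    """Return the EdgeOS config lines for one run (hypothetical helper)."""
    lines = ["configure"]
    if flow_lifetime is not None:
        lines.append(f"set system offload flow-lifetime {flow_lifetime}")
    if table_size is not None:
        lines.append(f"set system offload ipv4 table-size {table_size}")
    lines.append("commit; exit")
    return lines


def build_matrix():
    """Yield (label, commands) pairs, varying one knob at a time (an assumption)."""
    for fto in FLOW_LIFETIMES:
        yield f"fto{fto}", offload_commands(flow_lifetime=fto)
    for tbl in TABLE_SIZES:
        yield f"tbl{tbl}", offload_commands(table_size=tbl)


if __name__ == "__main__":
    for label, commands in build_matrix():
        print(f"# {label}")
        print("\n".join(commands))
```

Each printed snippet can be pasted into the router's CLI before the corresponding loadtest run.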

Issues during the test run

Below, one per section, are the issues I encountered while running the tests.

Documentation of flow-lifetime

The Ubiquiti documentation page (linked above) says that flow-lifetime can be 0-4294967295. That is a lie:

    ubnt@EdgeRouter-4# set system offload flow-lifetime 0
    must be between 1-300

So no 0 value for us. Given the lie, I have also run a test with an explicit flow lifetime of 12, to make sure that the unspecified default is indeed 12.
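A small guard like the following would catch such a value before it reaches the router. It is only a sketch: it hard-codes the 1-300 range from the error message above, the function name is hypothetical, and other firmware versions may enforce a different range.

```python
# Range reported by the router's own error message ("must be between 1-300"),
# not the 0-4294967295 range from the documentation.
FLOW_LIFETIME_MIN = 1
FLOW_LIFETIME_MAX = 300


def valid_flow_lifetimes(candidates):
    """Split candidate values into (accepted, rejected) lists."""
    accepted, rejected = [], []
    for value in candidates:
        if FLOW_LIFETIME_MIN <= value <= FLOW_LIFETIME_MAX:
            accepted.append(value)
        else:
            rejected.append(value)
    return accepted, rejected


if __name__ == "__main__":
    accepted, rejected = valid_flow_lifetimes([12, 6, 1, 0])
    print("will test:", accepted)
    print("skipped:", rejected)
```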

Documentation of table-size

I might be reading the output of show ubnt offload statistics wrong:

    ubnt@EdgeRouter-4:~$ show ubnt offload statistics
    [...]
    Forwarding cache size (IPv4)
    =============================
    table_size (buckets) 16384
    [...]
    Flow cache table size (IPv6)
    =============================
    table_size (buckets) 8192
    [...]

but the default doesn’t seem to be 8192 for IPv4, as the documentation says, but rather 16384. And 8192 for IPv6.
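To avoid misreading the output by eye, the table sizes can be pulled out programmatically. The sketch below parses only the excerpt shown above. It assumes each section heading sits directly above a row of `=` characters and that each section has one `table_size (buckets)` line. The real output contains more lines, which are elided in this post, so treat the parser as illustrative.

```python
import re

# Excerpt of the output shown above; "[...]" marks lines elided in the post.
SAMPLE = """\
[...]
Forwarding cache size (IPv4)
=============================
table_size (buckets) 16384
[...]
Flow cache table size (IPv6)
=============================
table_size (buckets) 8192
[...]
"""

TABLE_SIZE_RE = re.compile(r"^\s*table_size \(buckets\)\s+(\d+)\s*$")


def parse_table_sizes(text):
    """Map each section heading to its table_size (buckets) value."""
    sizes = {}
    heading = None
    previous = ""
    for line in text.splitlines():
        stripped = line.strip()
        # A heading is the line directly above a row of "=" characters.
        if stripped and set(stripped) == {"="}:
            heading = previous
        else:
            match = TABLE_SIZE_RE.match(line)
            if match and heading is not None:
                sizes[heading] = int(match.group(1))
        previous = stripped
    return sizes


if __name__ == "__main__":
    for section, size in parse_table_sizes(SAMPLE).items():
        print(f"{section}: {size}")
```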

Sigh.

Are the table sizes applied?

During the testing I was overcome by a weird feeling that the table-size could only be adjusted at reboot [2]. So for one of the values (table-size = 512k) I also rebooted the device and re-ran the test. That is marked in the results below as try2.

But spoiler alert: no, it is likely applied at runtime, at commit time.

Results

Overall

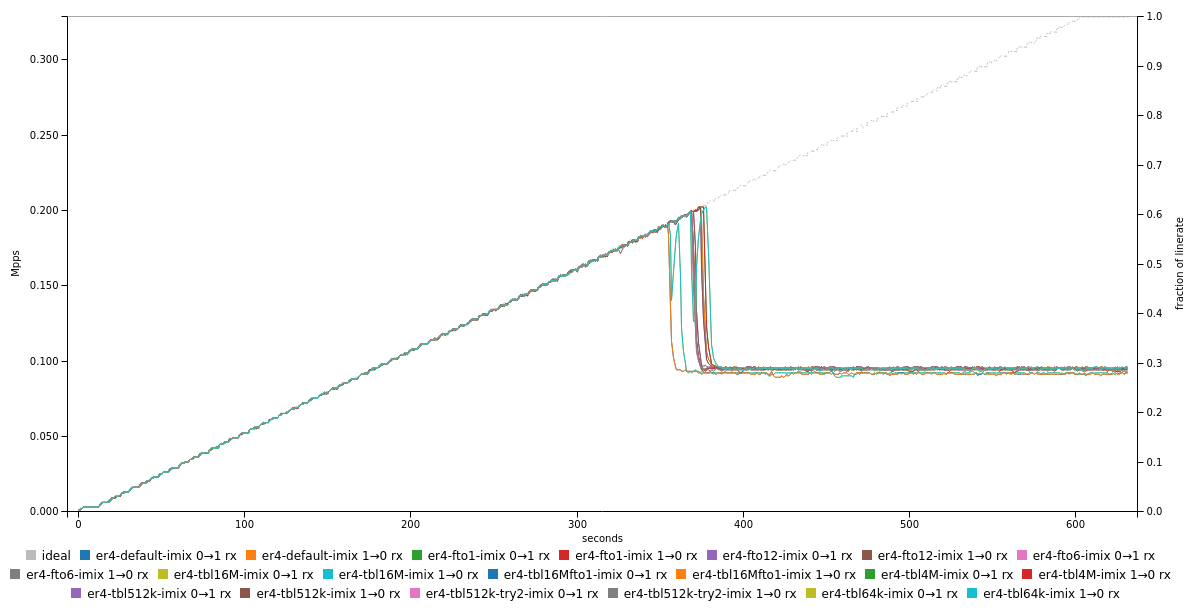

overall results; full details on loadtest results

The ftoX suffix signifies results for a flow-lifetime value of X, and the tblY suffix for a table-size value of Y.

I was expecting a significant delta, at least for the increased table sizes. Unfortunately, as depicted above, there’s hardly any difference.

In desperation I also tried flow-lifetime = 1 together with table-size = 16777216. That’s the tbl16Mfto1 series. Still no luck boosting the performance significantly.
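One way to reason about why these two knobs could be expected to matter is a deliberately simple model: every new flow holds one table entry until flow-lifetime expires, so the steady-state number of entries is roughly new flows per second times the lifetime (Little's law). This model is an assumption for illustration and not documented router behaviour. It treats the table size as plain entry capacity, and the flow rate below is made up, not measured in these tests.

```python
def steady_state_entries(new_flows_per_second, flow_lifetime_s):
    """Little's law: entries in the table = arrival rate * time each entry stays."""
    return new_flows_per_second * flow_lifetime_s


def table_overflows(new_flows_per_second, flow_lifetime_s, table_size):
    """True if the simple model predicts more entries than the table can hold."""
    return steady_state_entries(new_flows_per_second, flow_lifetime_s) > table_size


if __name__ == "__main__":
    HYPOTHETICAL_FLOW_RATE = 10_000  # new flows per second; illustrative only
    for lifetime in (12, 6, 1):
        for size in (8192, 65536, 524288):
            overflow = table_overflows(HYPOTHETICAL_FLOW_RATE, lifetime, size)
            verdict = "overflows" if overflow else "fits"
            print(f"lifetime={lifetime:>2}s table={size:>7}: {verdict}")
```

Under this model, a shorter lifetime or a bigger table should relieve the pressure, which is not what the measurements show.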

Scaled down imix

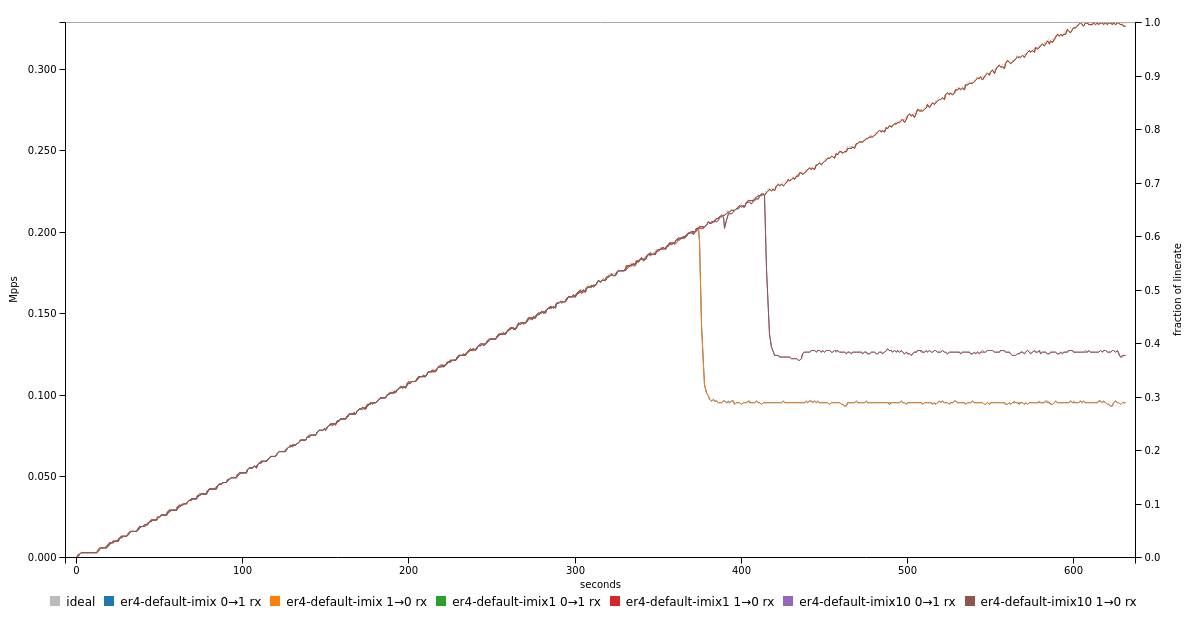

Therefore I also edited down the imix.py profile to only use smaller amount

of source IP, destination IP combos. In the graphs below imix1 profile

signifies 1 src_ip + 1 dst_ip. Similarly for imix103.

result with less flows; full details in flows – results

result with less flows; full details in flows – results
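To make the naming concrete: each distinct (src_ip, dst_ip) pair is a candidate flow, so shrinking either range shrinks the number of flows the router has to track. Below is a minimal sketch using Python's `ipaddress` module. The base addresses and the helper name are hypothetical and not taken from the actual imix.py profile, and ports are ignored.

```python
import ipaddress
from itertools import product


def ip_pairs(src_start, n_src, dst_start, n_dst):
    """Return every (src_ip, dst_ip) combination for the given range sizes."""
    src_base = ipaddress.IPv4Address(src_start)
    dst_base = ipaddress.IPv4Address(dst_start)
    sources = [str(src_base + i) for i in range(n_src)]
    destinations = [str(dst_base + i) for i in range(n_dst)]
    return list(product(sources, destinations))


if __name__ == "__main__":
    # imix1 in the post: 1 src_ip + 1 dst_ip, i.e. a single address pair.
    print(ip_pairs("16.0.0.1", 1, "48.0.0.1", 1))
    # A purely illustrative larger range: 4 sources x 5 destinations = 20 pairs.
    print(len(ip_pairs("16.0.0.1", 4, "48.0.0.1", 5)))
```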

Closing words

Even though it’s very surprising, neither the table-size nor the flow-lifetime made a significant difference for imix performance on the EdgeRouter 4.

The only factor that made a significant difference was reducing the number of flows.

Maybe there’s something more to be tweaked in the settings, but I don’t know what that would be.

In any case, there’s some opaque bottleneck that doesn’t seem to be directly influenced by the flow table size (or the flow lifetime) [4].

[1] And if I had read my own notes thoroughly, I would have saved myself some initial debugging due to the missing ip neigh add ... on the DUT. Because a baseline like this is no joy.

[2] Which is what happens to me when I’m tweaking a value of (IMO) fundamental importance and I’m getting an unexpectedly negligible delta.

[3] As my wife’s shirt says: “There are two kinds of people in this world: Those who can extrapolate from incomplete data”

[4] I think that a similar issue would come up when using a stateful firewall on Linux, which would be a direct analog of what the offload does. That said, I would expect netfilter to be highly responsive to sysctl tweaking.