Loadtesting a bunch of routers

apu6b2 under test… the spice ^W packets must flow!


Preface / thank you

I owe a debt of gratitude to Pim van Pelt, thanks to whom I was able to put this together in the first place. Not only was his Loadtesting at Coloclue post indispensable in getting me started, he also helped me debug my setup, answered my (often dumb) questions, and in the end… pushed me to deliver better (quality) results than I originally intended.

Thank you, Pim!

Problem statement

As mentioned in the Failing at a network loadtest (for now) post, I want to test the packet forwarding performance of multiple routers/setups. In the Succeeding at a network loadtest post I gave a list. Here's the full version of what I'll be testing:

- Ubiquiti – EdgeRouter X SFP

- PC Engines – apu4d4 // debian

- PC Engines – apu4d4 // danos

- PC Engines – apu6b2 [1] // debian

- PC Engines – apu6b2 // danos

- Ubiquiti – EdgeRouter 3 Lite

- Ubiquiti – EdgeRouter 4

Test setup

Profiles used

In order to get a better sense of the performance of each device, I’ll run the T-Rex loadtester with the following profiles:

- udp_1500B.py, a custom profile: single stream of 1500B UDP packets in each direction

- udp_500B.py, a custom profile: single stream of 500B UDP packets in each direction

- imix.py, a built-in “typical internet mix”, with multiple concurrent sessions

- udp_1pkt_simple_bdir.py, a built-in “64B” test (sends a single stream of minimally sized Ethernet frames in each direction) [2]
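To get a feel for why imix behaves so differently from the single-size profiles, here is a small sketch that computes the packet-weighted average frame size of a mix. It assumes the classic 7:4:1 "simple IMIX" ratio of 60B, 590B and 1514B frames (sizes without FCS); as far as I know, that is what T-Rex's built-in imix.py approximates, but treat the table below as an assumption and check the profile on your own install. The `average_frame_size` helper is hypothetical.

```python
# Hypothetical helper: packet-weighted average frame size of a traffic mix.
# ASSUMPTION: a 7:4:1 mix of 60/590/1514-byte frames (sizes exclude the 4B FCS).
IMIX = [(60, 7), (590, 4), (1514, 1)]  # (frame size in bytes, relative packet share)


def average_frame_size(mix):
    """Return the mean frame size in bytes, weighted by each size's packet share."""
    total_pkts = sum(share for _, share in mix)
    return sum(size * share for size, share in mix) / total_pkts


if __name__ == "__main__":
    print(f"average imix frame: {average_frame_size(IMIX):.1f} B")
```

With an average of roughly 358B per packet, imix line rate on gigabit lands at a few hundred kpps, far below the roughly 1.49 Mpps possible with 64B frames, while still mixing in plenty of small packets.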

The custom profiles are basically udp_1pkt_simple_bdir.py modified to carry a longer payload.
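The payload arithmetic behind "a longer payload" (and behind the "64B" naming explained in the footnotes) can be sketched as follows. These are my own hypothetical helpers, not part of T-Rex; they assume plain Ethernet II + IPv4 without options + UDP, with the 4B FCS appended by the NIC and short frames padded to the 64B minimum.

```python
# Hypothetical helpers for sizing the UDP payload of a single-stream profile.
# ASSUMPTION: Ethernet II (14B) + IPv4 without options (20B) + UDP (8B);
# the NIC appends a 4B FCS and pads short frames to 60B before the FCS.
ETH_HDR, IPV4_HDR, UDP_HDR, FCS = 14, 20, 8, 4
MIN_FRAME_NO_FCS = 60  # 64B minimum Ethernet frame minus the FCS


def payload_for_frame(frame_len_no_fcs):
    """UDP payload length needed for a frame of the given size (FCS excluded)."""
    payload = frame_len_no_fcs - ETH_HDR - IPV4_HDR - UDP_HDR
    if payload < 0:
        raise ValueError("frame too small for Ethernet + IPv4 + UDP headers")
    return payload


def wire_frame_len(payload_len):
    """Frame size on the wire (FCS included) after minimum-size padding."""
    frame = max(ETH_HDR + IPV4_HDR + UDP_HDR + payload_len, MIN_FRAME_NO_FCS)
    return frame + FCS


if __name__ == "__main__":
    print(wire_frame_len(10))       # 10B payload, as in the built-in "64B" profile
    print(payload_for_frame(1514))  # a 1500B IP packet inside a 1514B frame
```

The 1514B case is only one possible reading of "1500B"; the sketch doesn't assume which one the custom profiles use.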

The test profiles are all set up so that the flows are either between the 16.0.0.0/24 and 48.0.0.0/24 networks (imix) or directly between the 16.0.0.1 and 48.0.0.1 hosts (the rest).

Diagrams

To perform the loadtest, most of the devices under test will be set up in this fashion [3]:

Plus, on the DUT:

- static route: 16.0.0.0/8 via 100.65.1.2

- static route: 48.0.0.0/8 via 100.65.2.2
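To make the forwarding path explicit, here is a small sketch of the longest-prefix-match decision the DUT makes with these two static routes plus its two directly connected /24s. It uses Python's standard `ipaddress` module; the `ROUTES` table and the `next_hop` function are illustrative, not taken from any router's implementation.

```python
import ipaddress

# Illustrative DUT routing table: (prefix, next hop, or None if directly connected).
ROUTES = [
    (ipaddress.ip_network("100.65.1.0/24"), None),
    (ipaddress.ip_network("100.65.2.0/24"), None),
    (ipaddress.ip_network("16.0.0.0/8"), "100.65.1.2"),
    (ipaddress.ip_network("48.0.0.0/8"), "100.65.2.2"),
]


def next_hop(dst):
    """Return the next hop for dst via longest-prefix match ('connected' if on-link)."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    if not matches:
        raise LookupError(f"no route to {dst}")
    # The most specific (longest) prefix wins.
    net, hop = max(matches, key=lambda m: m[0].prefixlen)
    return hop if hop is not None else "connected"


if __name__ == "__main__":
    for dst in ("16.0.0.1", "48.0.0.1", "100.65.2.2"):
        print(dst, "->", next_hop(dst))
```

Because each /8 covers the corresponding /24 used by the profiles, every T-Rex flow is forwarded out of the port facing the opposite side of the loadtester.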

In the case of the apu6b2, I will (mainly) test under a slightly different setup, namely I'll use an MC220L media converter to make use of the APU's SFP port:

Note about the T-Rex config

It is equally important to modify the /etc/trex_cfg.yaml on the source/sink to contain proper dest_mac entries, otherwise the setup has no chance of working properly. Ask me how I know. :-D

Or better yet, use L3 addresses in the /etc/trex_cfg.yaml instead of L2 addresses. E.g.:

# /etc/trex_cfg.yaml using L3 addresses instead of L2 addresses
- version: 2
  interfaces: ["03:00.0", "03:00.1"]
  port_limit: 2
  port_info:
    - ip: 100.65.1.2
      default_gw: 100.65.1.1
    - ip: 100.65.2.2
      default_gw: 100.65.2.1
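As I understand it, in L3 mode T-Rex resolves each port's default_gw via ARP to learn the destination MAC, so each ip/default_gw pair has to sit on the same subnet. Below is a quick sanity check for that, assuming the YAML has already been loaded into a plain Python structure. The `check_port_info` helper and the /24 prefix length are my assumptions, not T-Rex features.

```python
import ipaddress

# Mirror of the port_info section of /etc/trex_cfg.yaml shown above.
PORT_INFO = [
    {"ip": "100.65.1.2", "default_gw": "100.65.1.1"},
    {"ip": "100.65.2.2", "default_gw": "100.65.2.1"},
]


def check_port_info(ports, prefixlen=24):
    """Return a list of problems; an empty list means every gateway is on-link."""
    problems = []
    for idx, port in enumerate(ports):
        # ASSUMPTION: each T-Rex port lives in a /24, as in this setup.
        net = ipaddress.ip_interface(f"{port['ip']}/{prefixlen}").network
        gw = ipaddress.ip_address(port["default_gw"])
        if gw not in net:
            problems.append(f"port {idx}: gateway {gw} not in {net}")
        if gw == ipaddress.ip_address(port["ip"]):
            problems.append(f"port {idx}: gateway equals the port address")
    return problems


if __name__ == "__main__":
    print(check_port_info(PORT_INFO) or "port_info looks consistent")
```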

Notes captured during loadtesting

Feel free to skip this section if you don’t care about the device setup and the random bits discovered during the loadtest.

EdgeRouter X SFP

Factory reset, upgraded to v2.0.9-hotfix.2, configured as:

configure

set interfaces ethernet eth2 address 100.65.1.1/24

set interfaces ethernet eth4 address 100.65.2.1/24

set protocols static route 16.0.0.0/8 next-hop 100.65.1.2

set protocols static route 48.0.0.0/8 next-hop 100.65.2.2

# more about the following below:

set system offload hwnat enable

commit; save; exit

# You should also add the following to bypass potential ARP-related issues:

sudo ip neigh add 100.65.1.2 dev eth2 lladdr 00:xx:xx:xx:xx:92 nud permanent

sudo ip neigh add 100.65.2.2 dev eth4 lladdr 00:xx:xx:xx:xx:93 nud permanent

# if either fails with "exists", then use "replace" instead of "add"
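The EdgeRouter configs in this post (this one and the ER3/ER4 ones below) differ only in interface names and in the offload knob, so here is a hypothetical Python helper that renders the same command sequence per model. It only prints the EdgeOS CLI lines already used in this post; it doesn't talk to the router.

```python
def edgeos_commands(port_a, port_b, offload):
    """Render the EdgeOS configure-mode commands used for the DUTs in this post.

    port_a faces the T-Rex port 100.65.1.2, port_b faces 100.65.2.2;
    offload is the model-specific 'set system offload ...' line.
    """
    return [
        "configure",
        f"set interfaces ethernet {port_a} address 100.65.1.1/24",
        f"set interfaces ethernet {port_b} address 100.65.2.1/24",
        "set protocols static route 16.0.0.0/8 next-hop 100.65.1.2",
        "set protocols static route 48.0.0.0/8 next-hop 100.65.2.2",
        offload,
        "commit; save; exit",
    ]


# Interface names and offload lines as used for each EdgeRouter in this post.
MODELS = {
    "ER-X SFP": ("eth2", "eth4", "set system offload hwnat enable"),
    "ER-3 Lite": ("eth1", "eth2", "set system offload ipv4 forwarding enable"),
    "ER-4": ("eth1", "eth2", "set system offload ipv4 forwarding enable"),
}

if __name__ == "__main__":
    for model, (a, b, offload) in MODELS.items():
        print(f"# {model}")
        print("\n".join(edgeos_commands(a, b, offload)))
```

The static `ip neigh` entries still have to be added separately from the shell, as shown above.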

Originally I ran it with hwnat off [4], but then I figured out that hwnat actually plays a non-trivial role in the router’s performance, even for simple IP forwarding. Therefore the nohwnat loadtests are with hwnat off, and the normal ones (without suffix) are with hwnat on.

Also, the piss-poor performance on the imix profile (as you will see later) is due to the ER-X being unable to handle the concurrent sessions well. When I edited imix.py down to use only 1 src IP and 1 dst IP [5], the performance was significantly better, to the point where it reached 20 kpps without any packet loss. I didn't test any further, though.

EdgeRouter 3 Lite

Reset to factory defaults, upgraded to v2.0.9-hotfix.2. Then:

configure

set interfaces ethernet eth1 address 100.65.1.1/24

set interfaces ethernet eth2 address 100.65.2.1/24

set protocols static route 16.0.0.0/8 next-hop 100.65.1.2

set protocols static route 48.0.0.0/8 next-hop 100.65.2.2

set system offload ipv4 forwarding enable

commit; save; exit

sudo ip neigh add 100.65.1.2 dev eth1 lladdr 00:xx:xx:xx:xx:92 nud permanent

sudo ip neigh add 100.65.2.2 dev eth2 lladdr 00:xx:xx:xx:xx:93 nud permanent

The resulting version/offload status looks like this:


$ show version

Version: v2.0.9-hotfix.2

Build ID: 5402463

Build on: 05/11/21 13:17

Copyright: 2012-2020 Ubiquiti Networks, Inc.

HW model: EdgeRouter Lite 3-Port

$ show ubnt offload

IP offload module : loaded

IPv4

forwarding: enabled

vlan : disabled

pppoe : disabled

gre : disabled

bonding : disabled

IPv6

forwarding: disabled

vlan : disabled

pppoe : disabled

bonding : disabled

IPSec offload module: loaded

Traffic Analysis :

export : disabled

dpi : disabled

version : 1.564

I wasn’t present for the entirety of the loadtest (all 4 profiles together take a bit over 40 minutes), but when I came back during the imix.py loadtest, I found the console complaining of:

octeon_ethernet 11800a0000000.pip eth2: Failed WQE allocate

octeon_ethernet 11800a0000000.pip eth2: Failed WQE allocate

octeon_ethernet 11800a0000000.pip eth1: Failed WQE allocate

octeon_ethernet 11800a0000000.pip eth1: Failed WQE allocate

octeon_ethernet 11800a0000000.pip eth1: Failed WQE allocate

That explains the result (more on that below).

EdgeRouter 4

Reset to factory defaults, upgraded to v2.0.9-hotfix.2. Then:

configure

set interfaces ethernet eth1 address 100.65.1.1/24

set interfaces ethernet eth2 address 100.65.2.1/24

set protocols static route 16.0.0.0/8 next-hop 100.65.1.2

set protocols static route 48.0.0.0/8 next-hop 100.65.2.2

set system offload ipv4 forwarding enable

commit; save; exit

sudo ip neigh add 100.65.1.2 dev eth1 lladdr 00:xx:xx:xx:xx:92 nud permanent

sudo ip neigh add 100.65.2.2 dev eth2 lladdr 00:xx:xx:xx:xx:93 nud permanent

The resulting version/offload status looks like this:


$ show version

Version: v2.0.9-hotfix.2

Build ID: 5402463

Build on: 05/11/21 13:17

Copyright: 2012-2020 Ubiquiti Networks, Inc.

HW model: EdgeRouter 4

$ show ubnt offload

IP offload module : loaded

IPv4

forwarding: enabled

vlan : disabled

pppoe : disabled

gre : disabled

bonding : disabled

IPv6

forwarding: disabled

vlan : disabled

pppoe : disabled

bonding : disabled

IPSec offload module: loaded

Traffic Analysis :

export : disabled

dpi : disabled

version : 1.564

No noticeable errors on the console during the test.

PC Engines – apu4d4 // debian

$ cat /etc/debian_version

10.10

$ uname -r

4.19.0-17-amd64

$ sysctl net.ipv4.ip_forward=1

net.ipv4.ip_forward = 1

$ ip link set enp2s0 up

$ ip link set enp3s0 up

$ ip a a 100.65.1.1/24 dev enp2s0

$ ip a a 100.65.2.1/24 dev enp3s0

$ ip ro a 16.0.0.0/8 via 100.65.1.2

$ ip ro a 48.0.0.0/8 via 100.65.2.2

$ ip neigh add 100.65.1.2 dev enp2s0 lladdr 00:xx:xx:xx:xx:92 nud permanent

$ ip neigh add 100.65.2.2 dev enp3s0 lladdr 00:xx:xx:xx:xx:93 nud permanent

$ iptables -F

$ iptables -X
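The Debian setups in this post (the apu4d4 here, and the apu6b2 SFP and copper variants below) run the same command sequence with different interface names. A hypothetical helper that renders it is sketched below; the MAC addresses are passed in, and the elided `00:xx:…` placeholders from this post are kept as-is rather than filled in.

```python
def linux_router_commands(port_a, port_b, mac_a, mac_b):
    """Render the sysctl/iproute2/iptables commands used to turn a Debian box into the DUT.

    port_a faces T-Rex 100.65.1.2 (MAC mac_a), port_b faces 100.65.2.2 (MAC mac_b).
    """
    return [
        "sysctl net.ipv4.ip_forward=1",
        f"ip link set {port_a} up",
        f"ip link set {port_b} up",
        f"ip a a 100.65.1.1/24 dev {port_a}",
        f"ip a a 100.65.2.1/24 dev {port_b}",
        "ip ro a 16.0.0.0/8 via 100.65.1.2",
        "ip ro a 48.0.0.0/8 via 100.65.2.2",
        f"ip neigh add 100.65.1.2 dev {port_a} lladdr {mac_a} nud permanent",
        f"ip neigh add 100.65.2.2 dev {port_b} lladdr {mac_b} nud permanent",
        "iptables -F",
        "iptables -X",
    ]


# Interface pairs as used in this post; the MACs stay elided placeholders.
SETUPS = {
    "apu4d4": ("enp2s0", "enp3s0"),
    "apu6b2 sfp": ("enp1s0", "enp3s0"),
    "apu6b2 copper": ("enp2s0", "enp3s0"),
}

if __name__ == "__main__":
    for name, (a, b) in SETUPS.items():
        print(f"# {name}")
        print("\n".join(linux_router_commands(a, b, "00:xx:xx:xx:xx:92", "00:xx:xx:xx:xx:93")))
```

The copper variant below additionally flushes the old addresses and takes enp1s0 down first; the helper doesn't cover that cleanup step.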

Just a few errors in dmesg:

perf: interrupt took too long (2676 > 2500), lowering kernel.perf_event_max_sample_rate to 74500

perf: interrupt took too long (3355 > 3345), lowering kernel.perf_event_max_sample_rate to 59500

perf: interrupt took too long (4335 > 4193), lowering kernel.perf_event_max_sample_rate to 46000

perf: interrupt took too long (5474 > 5418), lowering kernel.perf_event_max_sample_rate to 36500

PC Engines – apu4d4 // danos

Booted the DANOS (Inverness) 2105 live CD, logged in (tmpuser/tmppwd), and configured:

configure

set interfaces dataplane dp0p2s0 address 100.65.1.1/24

set interfaces dataplane dp0p3s0 address 100.65.2.1/24

set protocol static route 16.0.0.0/8 next-hop 100.65.1.2

set protocol static route 48.0.0.0/8 next-hop 100.65.2.2

commit; exit

I mean, I was expecting it to be a tad harder than this. Nope. And no issues either.

PC Engines – apu6b2 // debian

See the section below for more info on the fiber setup.

Fresh Debian netinst, with almost no services (apart from the SSH server). Configured:

$ cat /etc/debian_version

10.10

$ uname -r

4.19.0-17-amd64

$ sysctl net.ipv4.ip_forward=1

net.ipv4.ip_forward = 1

$ ip link set enp1s0 up

$ ip link set enp3s0 up

$ ip a a 100.65.1.1/24 dev enp1s0

$ ip a a 100.65.2.1/24 dev enp3s0

$ ip ro a 16.0.0.0/8 via 100.65.1.2

$ ip ro a 48.0.0.0/8 via 100.65.2.2

$ ip neigh add 100.65.1.2 dev enp1s0 lladdr 00:xx:xx:xx:xx:92 nud permanent

$ ip neigh add 100.65.2.2 dev enp3s0 lladdr 00:xx:xx:xx:xx:93 nud permanent

$ iptables -F

$ iptables -X

Before/during the SFP test, there were some messages in dmesg that might be relevant:

igb 0000:03:00.0 enp3s0: igb: enp3s0 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX

igb 0000:01:00.0 enp1s0: igb: enp1s0 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: None

perf: interrupt took too long (2505 > 2500), lowering kernel.perf_event_max_sample_rate to 79750

perf: interrupt took too long (3379 > 3131), lowering kernel.perf_event_max_sample_rate to 59000

perf: interrupt took too long (4262 > 4223), lowering kernel.perf_event_max_sample_rate to 46750

perf: interrupt took too long (5377 > 5327), lowering kernel.perf_event_max_sample_rate to 37000

And for the copper setup [6]:

$ sysctl net.ipv4.ip_forward=1

net.ipv4.ip_forward = 1

$ ip a fl dev enp1s0

$ ip a fl dev enp2s0

$ ip a fl dev enp3s0

$ ip link set enp1s0 down

$ ip link set enp2s0 up

$ ip link set enp3s0 up

$ ip a a 100.65.1.1/24 dev enp2s0

$ ip a a 100.65.2.1/24 dev enp3s0

$ ip ro a 16.0.0.0/8 via 100.65.1.2

$ ip ro a 48.0.0.0/8 via 100.65.2.2

$ ip neigh add 100.65.1.2 dev enp2s0 lladdr 00:xx:xx:xx:xx:92 nud permanent

$ ip neigh add 100.65.2.2 dev enp3s0 lladdr 00:xx:xx:xx:xx:93 nud permanent

$ iptables -F

$ iptables -X

Before/during the copper test:

igb 0000:02:00.0 enp2s0: igb: enp2s0 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX

igb 0000:03:00.0 enp3s0: igb: enp3s0 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX

(Notice how both ports negotiated RX flow control, whereas the fiber port (enp1s0) in the SFP-test output had Flow Control: None.)

PC Engines – apu6b2 // danos

As described above in the “Test setup” section, the APU is connected to my source/sink using a TP-Link MC220L media converter. For the optical path I’m using a pair of Unifi UF-SM-1G-S (singlemode 1310/1550 + 1550/1310) mini GBICs with a 5m singlemode cable. It’s sort of apparent from the picture at the top of the page. :)

As a burn-in test, I blasted udp_1500B.py at 100% of linerate for a few minutes, just to make sure there were no obvious drops. All nominal.

Booted the DANOS (Inverness) 2105 live CD, logged in (tmpuser/tmppwd), and configured:

configure

# dp0p1s0 is the SFP port

set interfaces dataplane dp0p1s0 address 100.65.1.1/24

# dp0p3s0 is the second (middle) copper port

set interfaces dataplane dp0p3s0 address 100.65.2.1/24

set protocol static route 16.0.0.0/8 next-hop 100.65.1.2

set protocol static route 48.0.0.0/8 next-hop 100.65.2.2

commit; exit

For a more in-depth look at the apu6, I suggest checking out Pim’s PCEngines with SFP article, which he posted today.

Results

You can find all the relevant details graphed on the Loadtest results page [7] (or on the per-device result pages: apu4, apu6, ER-X, ER3, ER4).

The raw JSON output from all of the loadtests is also available.

I’ll break the results down:

While all of the profiles tested provide some signal, I think the two most important ended up being:

- udp_1pkt_simple_bdir.py (64B), answering the question: how many raw packets per second can this device actually push?

- imix.py, answering the question: does it suck at routing multiple streams?

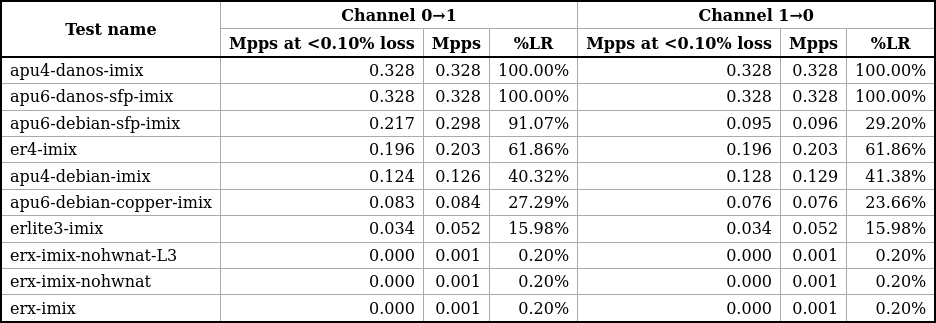

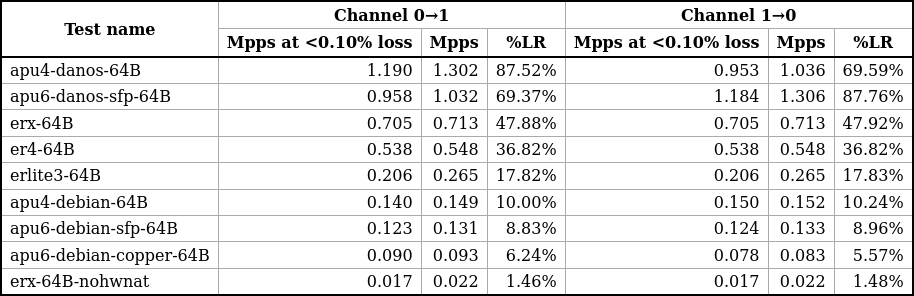

What follows are tables showing the max packets-per-second (pps) performance without suffering substantial loss, the max achieved pps, and what that max pps amounts to as a percentage of linerate. All broken down per “channel” (direction).
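The "percent of linerate" figure boils down to simple arithmetic. Here is a minimal sketch, assuming 1GbE ports and frame sizes that include the 4B FCS; on the wire, every frame additionally costs 20B of preamble, start-of-frame delimiter and inter-frame gap. The function names are mine, not from T-Rex or the results pages.

```python
# Theoretical Ethernet line rate in packets per second.
# ASSUMPTION: frame_len includes the 4B FCS; each frame also costs 20B of
# L1 overhead on the wire (7B preamble + 1B SFD + 12B inter-frame gap).
L1_OVERHEAD = 20
GIGABIT = 1_000_000_000


def line_rate_pps(frame_len, link_bps=GIGABIT):
    """Max frames per second that a link of link_bps can carry for frame_len-byte frames."""
    return link_bps / ((frame_len + L1_OVERHEAD) * 8)


def percent_of_line_rate(measured_pps, frame_len, link_bps=GIGABIT):
    """Express a measured per-direction pps figure as a percentage of line rate."""
    return 100.0 * measured_pps / line_rate_pps(frame_len, link_bps)


if __name__ == "__main__":
    for size in (64, 1518):
        print(f"{size:>5} B: {line_rate_pps(size):>12,.0f} pps")
```

For 64B frames this gives the familiar ~1.488 Mpps per direction on gigabit; 1518B frames come out at roughly 81 kpps. Since the tables are broken down per direction, the measured pps to plug in is the one-direction figure.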

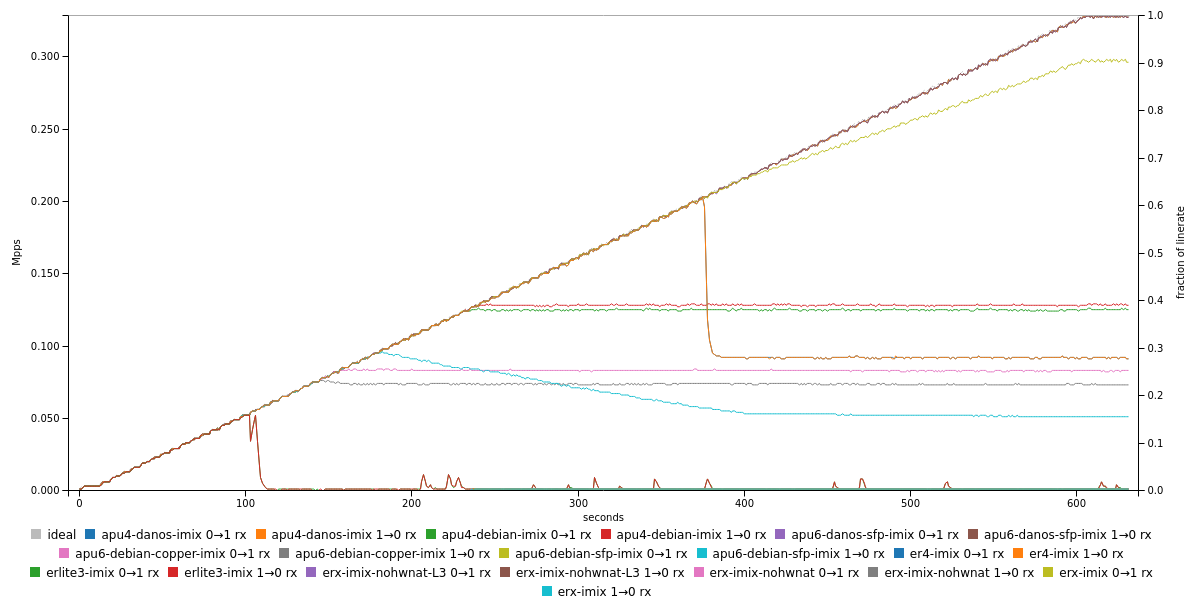

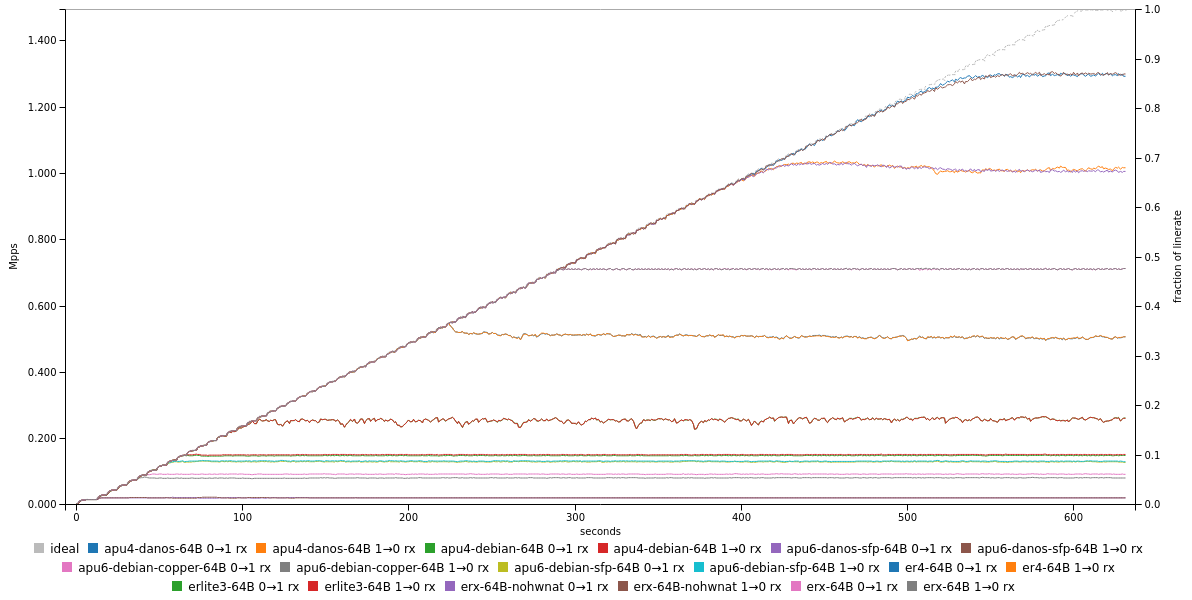

The graph then shows the progress of the loadtest (pps vs. time, as the transmit rate slowly ramps up).

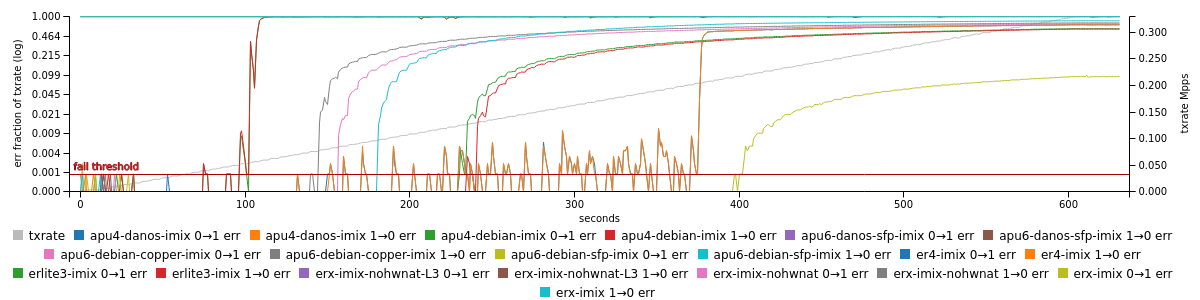

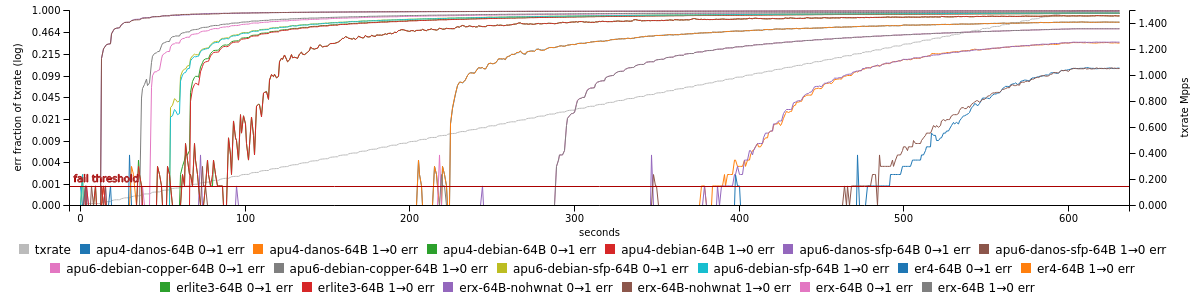

And the error graph shows similar data, but plotted as the logarithm of loss-rate/transmit-rate (y axis) alongside the transmit rate itself (y2 axis).
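The error-graph quantity, and the "max pps without substantial loss" figure from the tables, can be sketched like this. Both the 0.1% threshold for "substantial" loss and the sample format (pairs of tx/rx pps taken during the ramp-up) are assumptions for illustration, not necessarily what the results pages use.

```python
import math


def loss_ratio(tx_pps, rx_pps):
    """Fraction of transmitted packets that were not received (0.0 = no loss)."""
    if tx_pps <= 0:
        return 0.0
    return max(tx_pps - rx_pps, 0) / tx_pps


def log_loss(tx_pps, rx_pps, floor=1e-9):
    """log10 of the loss ratio, clamped at floor so zero loss stays plottable."""
    return math.log10(max(loss_ratio(tx_pps, rx_pps), floor))


def max_rate_below_loss(samples, threshold=0.001):
    """Highest rx pps among (tx_pps, rx_pps) samples whose loss ratio stays under threshold.

    ASSUMPTION: 0.1% loss is the cut-off for "substantial"; returns 0 if no sample qualifies.
    """
    ok = [rx for tx, rx in samples if loss_ratio(tx, rx) < threshold]
    return max(ok, default=0)
```

Clamping at a floor matters for the log scale: a loss-free sample would otherwise be log10(0), which is undefined.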

Of course, for full (and interactive) results you have to visit one of the result pages linked above.

Results for imix

imix results; full details in loadtest results


imix graph; full details in loadtest results


imix errors graph; full details in loadtest results


Results for 64B

64B results; full details in loadtest results


64B graph; full details in loadtest results


64B errors graph; full details in loadtest results


Commentary of the results

My takeaways:

- ER-X is hopelessly weak, to the point of failing to route a single stream in each direction at linerate. But that is hardly surprising.

- DANOS, no matter the hardware, is the clear winner: it achieves linerate for all but the 64B profile (where it does a respectable 70-80%).

- EdgeRouter 4 is no slouch (linerate for 500B, 48% of linerate for 64B), but its imix result is a disaster. Inspect the results page carefully. I would guess that the multiple parallel flows overwhelm some table that takes care of the offloading, and then the performance falls off a cliff [8].

- EdgeRouter 3 Lite is okay for big to mid-sized packets (nearly linerate for 500B), but rubbish for both 64B and imix.

- Debian (read as: a normal Linux distro without DPDK) on APUs is a bad idea, easily beaten by the erlite3 in all but imix ;)

Closing words

There you have it. I jokingly said that from now on I’ll be teasing people who only ever test their setup with iperf3. And I suspect I will.

Don’t get me wrong, iperf3 is a decent tool, and I’ve used it a lot in the past. Unfortunately it pales in comparison with Cisco’s T-Rex.

And if you’re morbidly curious about your own router / network performance, I think you could do a lot worse than taking T-Rex for a spin. Because T-Rex is so versatile, you can test basically anything from your local piddly router… all the way to a multi-country network ring [9].

Btw, for me the results speak clearly: a PCEngines APU with DANOS is the way to go. Does anyone want to buy some Ubiquiti routers on the cheap? :)

[1] The apu6b2 is a pre-production version of the apu6b4, the only difference being that it has 2GB of RAM instead of 4GB.

[2] Off-topic: I had trouble figuring out why it’s “64B” when the packet in question is 28+10 bytes (plus Ethernet framing). As Pim pointed out, Ethernet frames have a minimum size of 64B, so packets that would be too short get padded. That also means that the 1500B UDP packets are in fact bigger than that (at L1). Naming is hard.

[3] And I’ll freely admit that I cribbed parts of the test layout, as well as the 100.64.0.0/10 range, from Pim’s notes.

[4] Since, duh, I’m not doing any NAT, am I?

[5] My original suspicion was that it was related to the choice of src/dst ports, because T-Rex uses 53 (DNS) in imix.py. That turned out to be a red herring.

[6] Originally that wasn’t planned. But seeing the apu6-debian-sfp weirdness on the imix profile made me reconsider.

[7] Beware: the loadtest results pages are JavaScript heavy (especially the full one), and light-years from mobile friendly.

[8] Update 2021-11-21: I have posted a slightly more thorough loadtest of the EdgeRouter 4.

[9] By way of a “completely random” example, say: IPng’s European ring? :)